In recent years, the topic of fairness in algorithms has received a great deal of attention. As algorithms become part of the decision-making process in situations ranging from medical diagnoses to setting credit limits, important questions are being asked about whether these algorithms operate in fair ways. Criminal legal decision-making is one situation in which the use of algorithms has come under particular scrutiny. Algorithmic risk assessment instruments have been used for decades by judges and other legal actors to inform decisions made in court (Gottfredson & Moriarty, 2006).

Researchers interested in examining questions about the fairness of algorithms often turn to the same publicly available dataset from one algorithmic risk assessment instrument, the COMPAS, which is used in some jurisdictions across the United States (Blomberg et al., 2010). In many studies, researchers evaluate the algorithmic risk assessment instrument for fairness across subgroups of people defined by race. While definitions of fairness differ across studies, researchers generally consider fairness in this context to mean that the algorithm predicts outcomes, typically recidivism, with the same accuracy or the same amount of error across groups. Sometimes, researchers make changes to the algorithm (e.g., changing the item content or weighting), changes to the data (e.g., including or excluding people from the sample), or changes to the outcomes being predicted (e.g., rearrest vs. reconviction) to see if those changes improve the fairness of predictions.
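To make this notion of fairness concrete, here is a minimal sketch of how accuracy and error rates might be compared across groups. It is an illustration only: the function name `group_error_rates`, the group labels "A" and "B", and the toy records are all hypothetical and do not come from the COMPAS dataset or from any particular study.

```python
from collections import defaultdict


def group_error_rates(records):
    """Compute accuracy, false positive rate, and false negative rate per group.

    `records` is an iterable of (group, predicted_high_risk, reoffended) tuples.
    This layout is an assumption made for illustration, not a real data format.
    """
    counts = defaultdict(lambda: {"tp": 0, "fp": 0, "tn": 0, "fn": 0})
    for group, predicted, actual in records:
        if predicted and actual:
            key = "tp"  # predicted high risk, outcome occurred
        elif predicted and not actual:
            key = "fp"  # predicted high risk, outcome did not occur
        elif not predicted and actual:
            key = "fn"  # predicted low risk, outcome occurred
        else:
            key = "tn"  # predicted low risk, outcome did not occur
        counts[group][key] += 1

    rates = {}
    for group, c in counts.items():
        total = sum(c.values())
        negatives = c["fp"] + c["tn"]
        positives = c["tp"] + c["fn"]
        rates[group] = {
            "accuracy": (c["tp"] + c["tn"]) / total,
            # Rates are undefined when a group has no cases of that kind.
            "false_positive_rate": c["fp"] / negatives if negatives else None,
            "false_negative_rate": c["fn"] / positives if positives else None,
        }
    return rates


# Made-up toy records, purely for illustration.
toy_records = [
    ("A", True, True), ("A", True, False), ("A", False, False), ("A", False, True),
    ("B", True, True), ("B", False, False), ("B", False, False), ("B", False, True),
]

rates = group_error_rates(toy_records)
for group, metrics in sorted(rates.items()):
    print(group, metrics)
```

In this toy example the two groups have the same false negative rate but different accuracy and false positive rates, which is the kind of gap that fairness studies typically report. Which of these quantities should be equal across groups is itself one of the points on which definitions differ.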

Researchers often generalize their findings from their investigation of one algorithmic risk assessment instrument to all risk assessment instruments used at all stages of the criminal legal system. However, the criminal legal system is incredibly complex, and each risk assessment instrument is designed to be used on a specific population of people to predict a specific outcome. When evaluations of fairness do not consider these complexities or take risk assessment instruments out of their context, the results from such studies may not be meaningful to real-world courtrooms or, worse, they may call for changes that would actually be harmful.

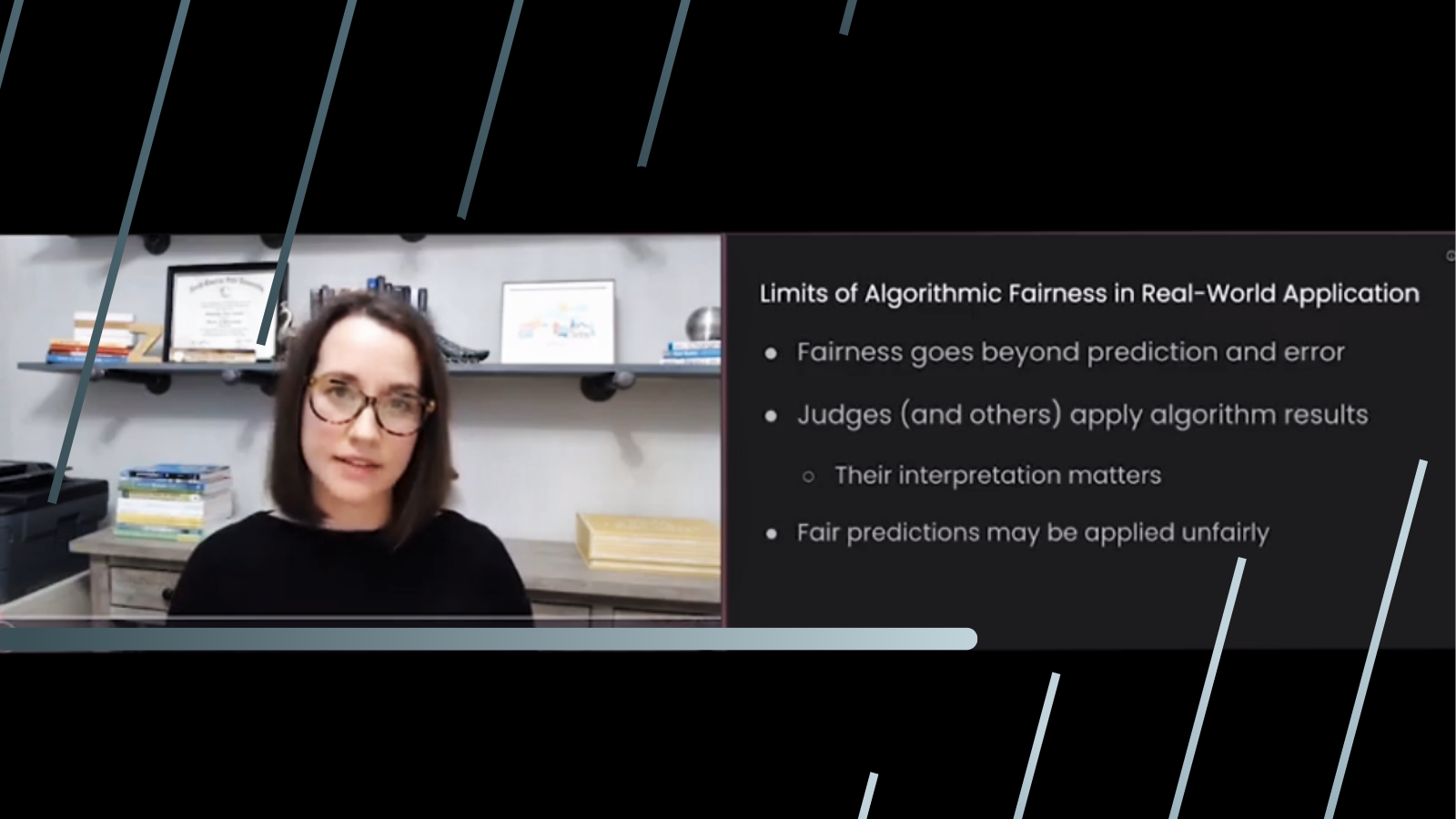

In a paper titled “It’s COMPASlicated: The Messy Relationship between RAI Datasets and Algorithmic Fairness Benchmarks,” an interdisciplinary group of researchers argues for the importance of considering context when evaluating the fairness of algorithmic risk assessment instruments. The video below is a brief clip from a presentation of this paper given at the 2021 Neural Information Processing Systems (NeurIPS) Conference in the Datasets and Benchmarks Track. This clip outlines three points made in the paper that researchers should consider when they evaluate the fairness of algorithmic risk assessment instruments:

- Focusing only on fairness in prediction and error is not enough to ensure that fair decisions are made based on the estimates of risk produced by the algorithms.

- When researchers study algorithmic risk assessment instruments, they are contributing to a discussion about the values at play in decisions made in the criminal legal system.

- Researchers should engage with work from the fields of Psychology and Criminology that originally created algorithmic risk assessment instruments and that continue to train people who use the instruments.

To watch the full talk, click below.

References

Blomberg, T., Bales, W., Mann, K., Meldrum, R., & Nedelec, J. (2010). Validation of the COMPAS risk assessment classification instrument. College of Criminology and Criminal Justice, Florida State University, Tallahassee, FL.

Gottfredson, S. D., & Moriarty, L. J. (2006). Statistical risk assessment: Old problems and new applications. Crime & Delinquency, 52(1), 178-200. https://doi.org/10.1177/0011128705281748