Should We Fear Technology?

“There is a great danger of coming to rely so entirely on the electric button and its slaves that the wheels of initiative will be broken…” So wrote Dorothy Fisher, an educator and activist, in 1916; she did not think highly of the effect that buttons would have on society. Throughout history, people have tended to mistrust new technologies, and high technologies carry an added mystique. We can effortlessly intuit how a wheel works and why it makes motion easier. It is far harder to understand how a vehicle can drive itself, and that difficulty breeds trepidation about this type of technology. This is not new. In the 17th century, Blaise Pascal (of Pascal’s Wager fame) built the “first” calculator, the Pascaline, which could slowly add numbers of up to eight digits. Public reaction to the Pascaline resembled the later reaction to the electric button: people were suspicious of a machine that could count by itself. When the inner workings of a thing are opaque to us, we tend not to trust it, a reaction often traced to our fear of the unknown. In the past, this fear has led to real-world action. The Luddites of the early 19th century were so worried about losing their textile jobs to new textile machinery that they smashed the machines themselves. Disruptive technologies like stone tools, steam engines, motor vehicles, the internet, smartphones, and artificial intelligence cause significant shifts in the ways that we live.

This Generation’s Electric Button

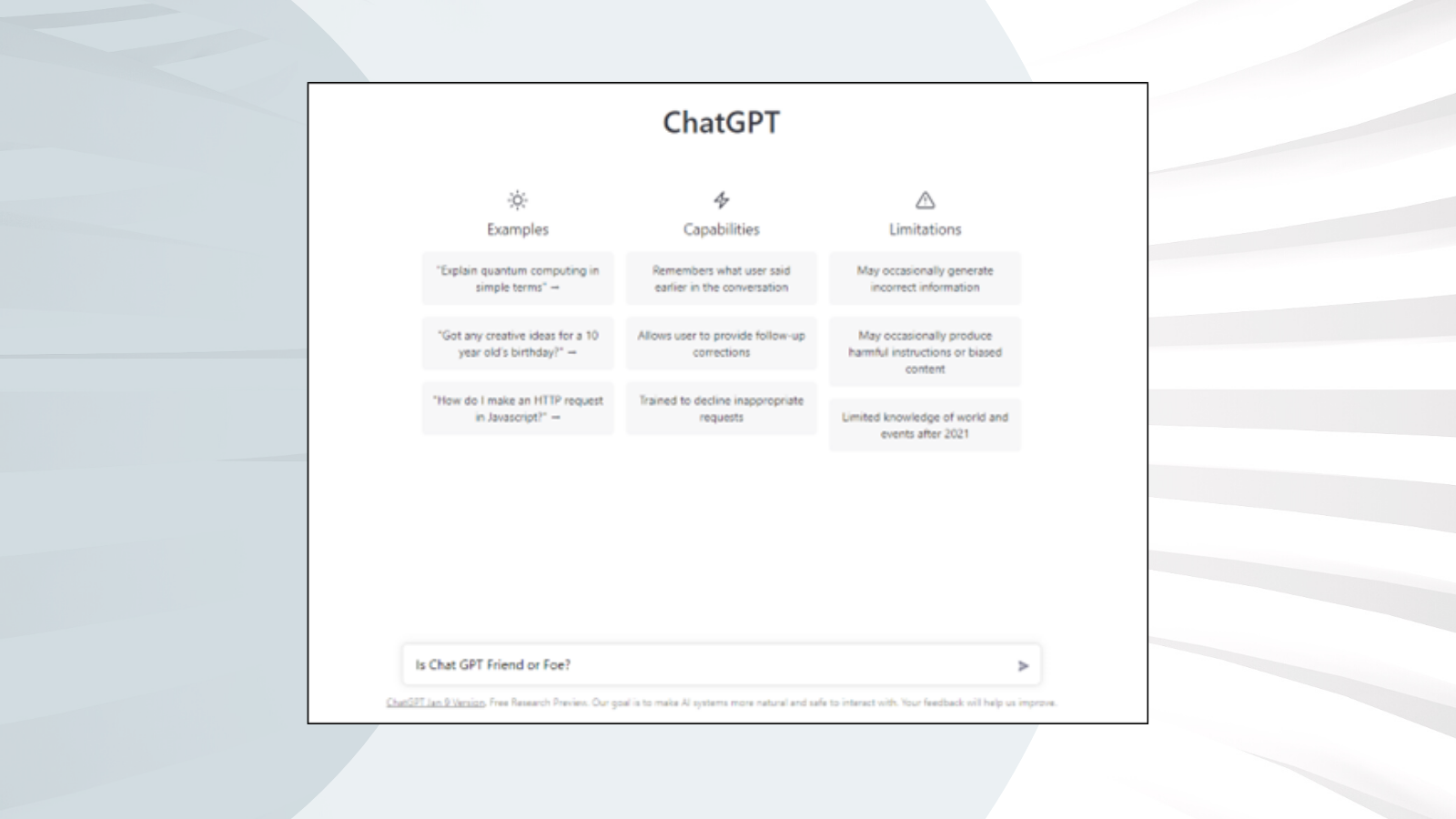

Nearly four hundred years after the first calculator, we now have ChatGPT, a state-of-the-art chatbot built on a generative (the “G”) pre-trained (the “P”) transformer (the “T”): a model that generates natural language, was pre-trained on a very large, unlabeled data set, and uses the transformer architecture. Essentially, it is a deep neural network that learns to predict how likely each possible next word (more precisely, the next “token”) is, given the text so far, based on patterns of language use in an enormous body of text drawn largely from the internet. Early natural-language processing models were mainly used for classification and prediction, e.g., “Is this email spam or not?” Generative models, by contrast, can produce novel statements in response to prompts, e.g., “Write a haiku, in the voice of Abraham Lincoln, about the state of U.S. politics today” (*see below for AI response). This, of course, raises new fears about what is happening behind the curtain to produce such human-like behavior. Generating new information by learning from prior information is something we do as humans; ChatGPT, however, can do it orders of magnitude faster.
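ChatGPT’s transformer is vastly more sophisticated, but the core idea of “learning next-word probabilities from text” can be illustrated with a toy bigram model, which simply counts which word follows which. This is a deliberately simplified stand-in, not how GPT models work internally; the function names and the tiny corpus below are hypothetical.

```python
from __future__ import annotations

from collections import Counter, defaultdict


def train_bigram_model(text: str) -> dict[str, dict[str, float]]:
    """Estimate P(next word | current word) by counting adjacent word pairs."""
    words = text.lower().split()
    follow_counts: dict[str, Counter] = defaultdict(Counter)
    # Count how often each word is immediately followed by each other word
    for current, nxt in zip(words, words[1:]):
        follow_counts[current][nxt] += 1
    # Normalize the counts for each word into probabilities that sum to 1
    model: dict[str, dict[str, float]] = {}
    for word, counts in follow_counts.items():
        total = sum(counts.values())
        model[word] = {nxt: count / total for nxt, count in counts.items()}
    return model


def most_likely_next(model: dict[str, dict[str, float]], word: str) -> str | None:
    """Return the highest-probability next word, or None if the word was never followed by anything."""
    options = model.get(word.lower())
    if not options:
        return None
    return max(options, key=options.get)


# A hypothetical toy corpus; real models train on vastly more text
corpus = "the cat sat on the mat the cat ate the fish"
model = train_bigram_model(corpus)
print(model["the"])                    # {'cat': 0.5, 'mat': 0.25, 'fish': 0.25}
print(most_likely_next(model, "the"))  # cat
```

Generating text would then mean repeatedly picking (or sampling) a next word and feeding it back in. A transformer replaces these simple pair counts with a learned function of the entire preceding context.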

To form a very (very) basic picture of a deep neural network, think of a (very complex) decision tree in which each node is a logistic regression whose input is the output of the regressions upstream of it. Such models learn through two complementary steps: forward propagation and backpropagation. In forward propagation, an input flows through the network to produce a prediction. That prediction is compared with the correct answer, and in backpropagation the resulting error is passed backward through the network to estimate how much each connection helped or hurt. The model then adjusts the parameters (weights) between the linked nodes to make a better prediction the next time. Each parameter is like a dial, and tuning enough of them is what lets a model learn, for example, the difference between a zero and the letter “O.” For a sense of scale, GPT-3, the model family from which ChatGPT was developed, has about 175 billion parameters. This is the learning in machine learning. The bottom line is that ChatGPT is based on a computational model for turning language into numbers and then identifying and generating patterns across them. Information is about patterns, and patterns are all around us: in the way we speak, where we shop, how often we drive, and so on. New technologies can help us uncover hidden patterns in our behavior that contribute to mental health issues, or that perpetuate social inequities, so that we can address them.
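To make “linked logistic regressions” and the forward/backward steps concrete, here is a minimal sketch of a tiny network with one hidden layer, trained by forward propagation, backpropagation, and gradient descent. The task (XOR), the learning rate, the number of hidden units, and all function names are hypothetical choices for illustration; real language models use different architectures and billions of parameters, whereas this one has just 17 “dials.”

```python
import math
import random

# Toy task (a hypothetical choice): XOR, where the answer is 1 only when exactly one input is 1.
# A single logistic regression cannot learn it; linked layers of them can.
DATA = [((0.0, 0.0), 0.0), ((0.0, 1.0), 1.0), ((1.0, 0.0), 1.0), ((1.0, 1.0), 0.0)]


def sigmoid(z: float) -> float:
    """Logistic function, written to avoid overflow for large negative z."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def forward(x, w_hidden, b_hidden, w_out, b_out):
    """Forward propagation: inputs flow through the network to a prediction."""
    # Each hidden unit is a logistic regression on the raw inputs
    hidden = [sigmoid(sum(w * xi for w, xi in zip(weights, x)) + b)
              for weights, b in zip(w_hidden, b_hidden)]
    # The output unit is a logistic regression on the hidden units' outputs
    output = sigmoid(sum(w * h for w, h in zip(w_out, hidden)) + b_out)
    return hidden, output


def train(epochs=5000, learning_rate=0.5, hidden_units=4, seed=0):
    rng = random.Random(seed)
    # The "dials": weights start at random settings, biases at zero
    w_hidden = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(hidden_units)]
    b_hidden = [0.0] * hidden_units
    w_out = [rng.uniform(-1, 1) for _ in range(hidden_units)]
    b_out = 0.0
    losses = []
    for _ in range(epochs):
        total_loss = 0.0
        for x, target in DATA:
            hidden, p = forward(x, w_hidden, b_hidden, w_out, b_out)
            # Cross-entropy loss: large when a confident prediction is wrong
            p_safe = min(max(p, 1e-12), 1 - 1e-12)
            total_loss += -(target * math.log(p_safe) + (1 - target) * math.log(1 - p_safe))
            # Backpropagation: with a sigmoid output and cross-entropy loss,
            # the error signal at the output is simply (prediction - target)
            d_out = p - target
            # The chain rule passes a share of that error back to each hidden unit
            d_hidden = [d_out * w_out[j] * hidden[j] * (1 - hidden[j])
                        for j in range(hidden_units)]
            # Gradient descent: turn each dial slightly in the direction that lowers the loss
            for j in range(hidden_units):
                w_out[j] -= learning_rate * d_out * hidden[j]
                b_hidden[j] -= learning_rate * d_hidden[j]
                for i in range(2):
                    w_hidden[j][i] -= learning_rate * d_hidden[j] * x[i]
            b_out -= learning_rate * d_out
        # Average loss over this pass through the data
        losses.append(total_loss / len(DATA))
    return (w_hidden, b_hidden, w_out, b_out), losses


params, losses = train()
print(f"average loss, first pass: {losses[0]:.3f}; last pass: {losses[-1]:.3f}")
for x, target in DATA:
    _, p = forward(x, *params)
    print(x, "target:", target, "prediction:", round(p, 2))
```

Every update comes from the same error signal flowing backward through the network; deep learning libraries automate exactly this chain-rule bookkeeping across many more layers and parameters.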